Live Streaming Program Documentation

Live Stream Setup Overview

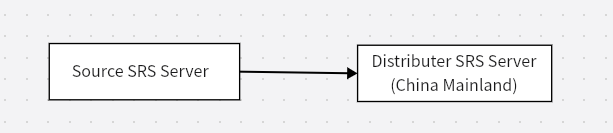

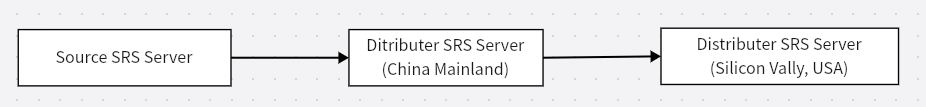

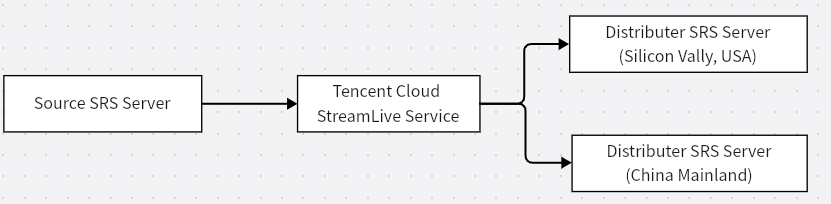

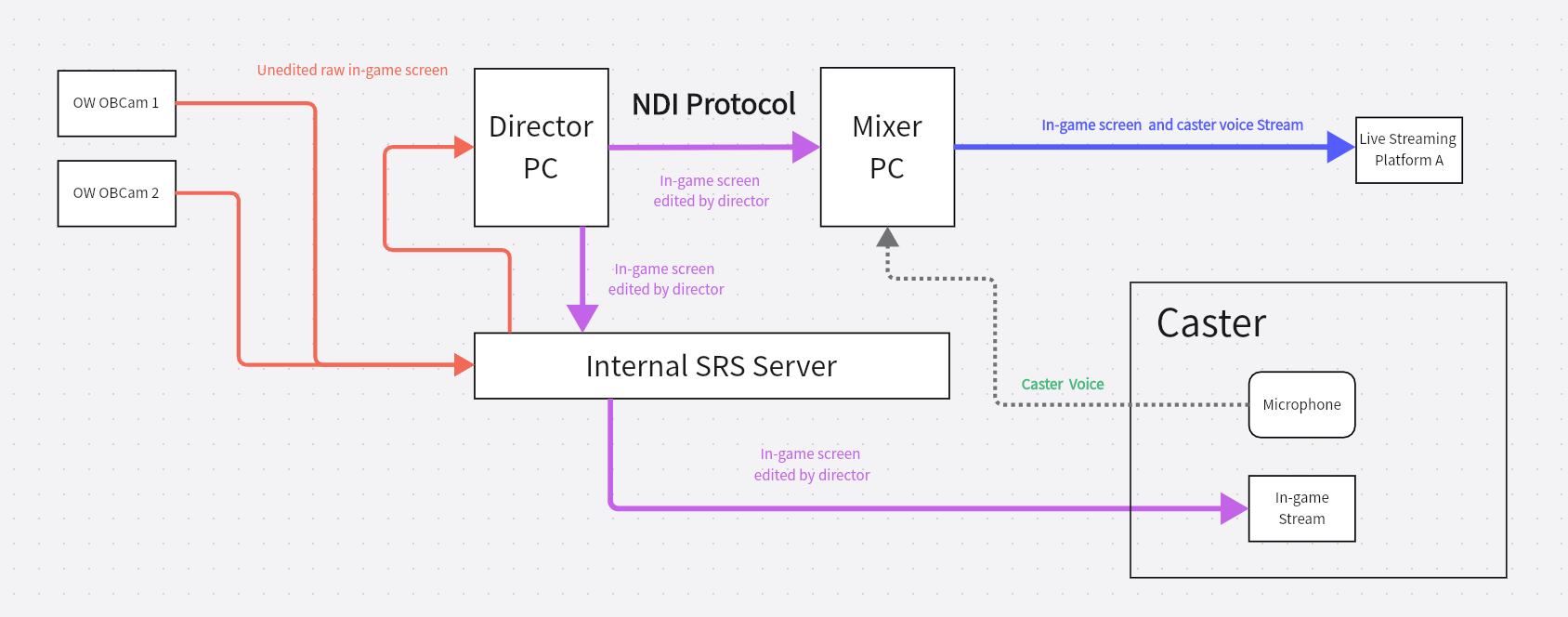

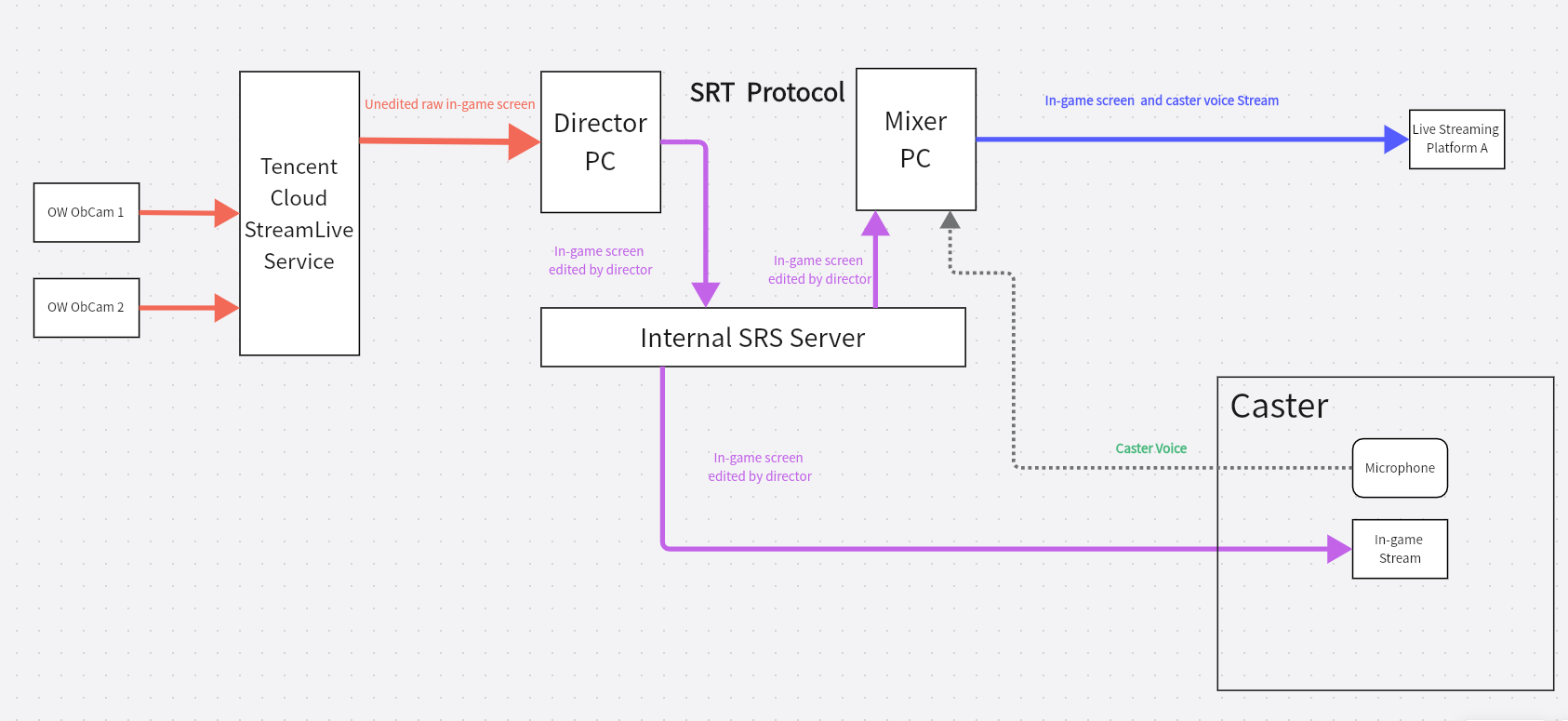

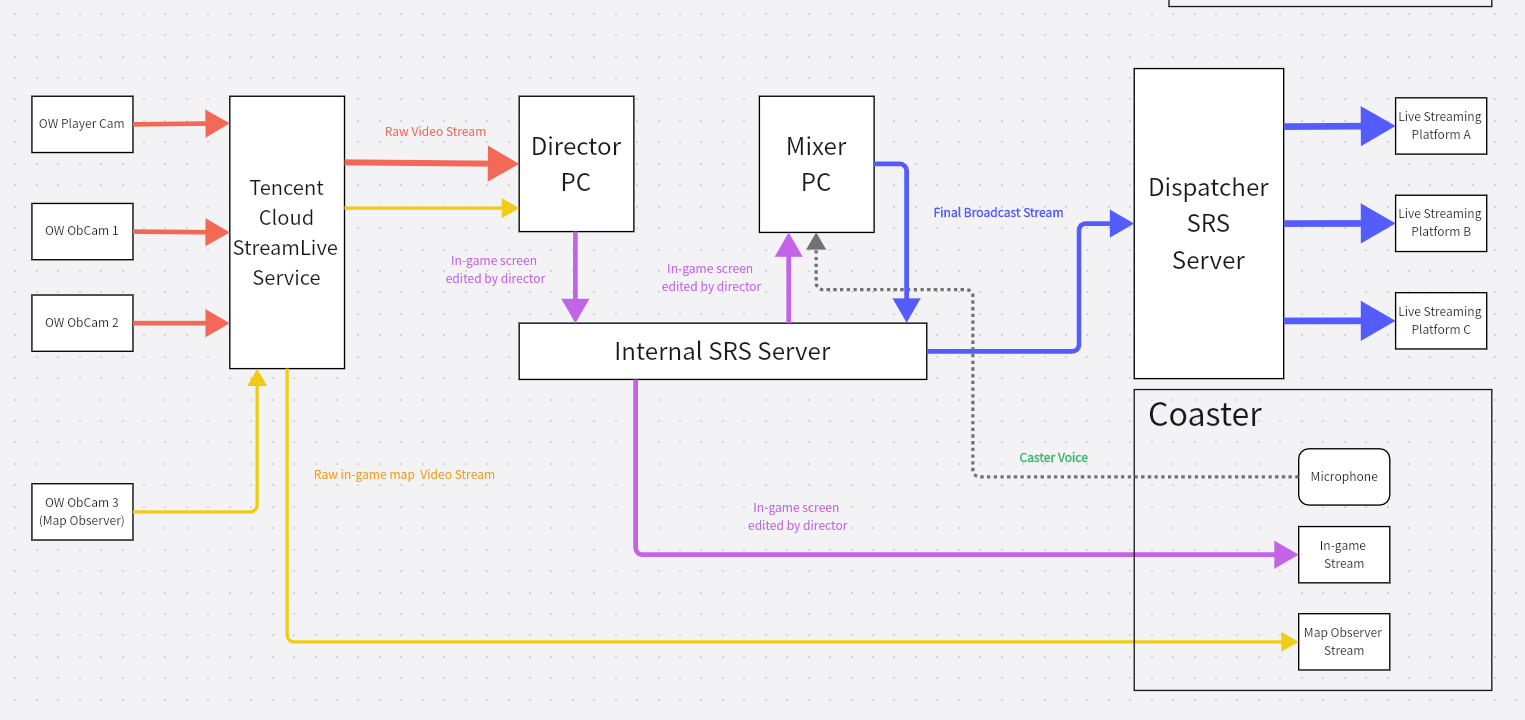

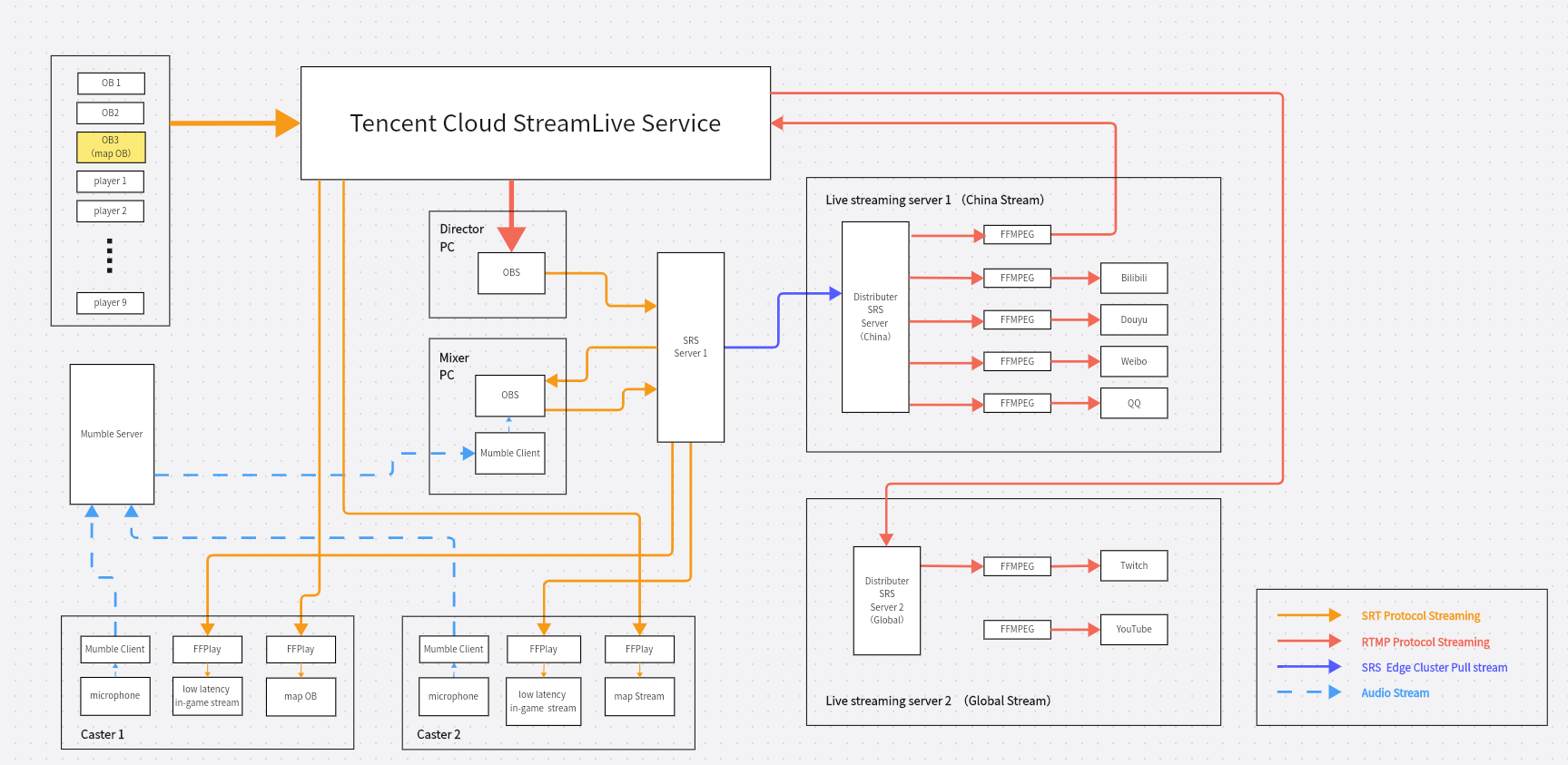

# Foreword

This document was compiled by the PS2CPC livestreaming team while building the streaming platform for our broadcasts of PlanetSide 2 OW (Outfit War) Nexus Season 1.

We do **not** have sufficient funding, **nor** are we professional directors, casters, developers, or practitioners in the live broadcast industry. Since none of our team members has a professional background, and most of us (including me, the author of this article) do not have a high level of English, most of the resources we used come from what is openly available on the Internet. The solution we settled on may **not** be the most reasonable one, but it is the one that, after testing and live use, we believe best meets our needs.

For the reasons above, our plan focuses on the following points:

1. Keep the cost as low as possible while remaining usable on our hardware and software.
2. Since we are not a LAN team, all of the work must be doable online.
3. The staff for each stream (casters/co-casters, OBcam users, and player cams) change from stream to stream, so we had to simplify the process and reduce the configuration requirements.

The plan described in this article is still being updated. In practice, we are constantly walking a fine line between identifying problems, solving them, and compromising on them. The best we can do is keep improving it whenever we have the time and the need, so the improvement process will be slow and continuous.

# The stream solution

## Brief description

PS2CPC's stream solution is different from that of most individual streamers. Here is a brief introduction, so that you can understand why some of the information below looks strange but is in fact necessary.

### The streamers setup

#### 1. OBcam users

These are the people who provide the in-game views. In the current setup, we have three OBcam users.
Two of them focus on following the competitive action in the match, while the third provides the in-game map. The map operator gives the director and casters a clear and detailed map, and can also guide the other two OBcams to other places on the map to capture a back-cap or a large fight from a different angle.

We know that other community developers and projects (Honu and PS2Alerts, for example) offer web apps that show the status of the map. Their scripts provide kill count comparisons and territory capture information through the API, which makes up for the lack of in-game information. We really admire what they have done and are very happy to use it. But we ended up turning off the script's map for a simple reason: the in-game map is closer to real time and more informative.

#### 2. Player cam

The player cams fill the gaps while the OBcams are on their way to a new position, and they give viewers a closer, more detailed view of the ongoing battle. Ideally, each team would make three player cams available to the director, from its leader/air/infantry or armor/air/infantry players.

#### 3. Casters

You know what they do. In most PSB streams, the casters are also the people operating the OBcams. We prefer to assign independent OBcam users instead, because this helps with what we discuss next: the director's work.

#### 4. Director and Director Assistant

The director has control of all the cams. The director is responsible for monitoring every cam provided and deciding which cam to switch to and show to the casters. At the same time, the director needs to listen to what the casters are talking about, respond to their requests (for example, where an OBcam should go), and give orders to the OBcams. The director assistant helps the director with these tasks, to reduce stress and mistakes.

#### 5. Other staff and tech support
These staff members arrange the casters and cams and prepare the hardware and software. They do not take part in the stream itself.

## Stream structure

### Problems

There are some problems we need to solve:

1. How can the director receive 9 cams stably (3 OBcams and 6 player cams)?
2. How can we provide the casters with a stable, low-latency view of the cam?
3. How can we push the stream to multiple platforms?

### Solutions

#### 1. How can the director receive 9 cams stably (3 OBcams and 6 player cams)?

The answer is simple: we need a real-time video server. Because of the restrictive network conditions in mainland China and the unstable connections between different internet providers, it was difficult for us to handle this with ordinary home broadband. If you are not in mainland China, you may have more options, and might even consider setting up your own local live stream service to solve this problem.

After testing, we finally chose a solution with two real-time video servers.

- A paid stream server solution: [Tencent Cloud StreamLive Service](https://www.tencentcloud.com/products/mdl).
  - Tencent Cloud has access points on multiple internet providers and well-developed CDN support, which helps us acquire and distribute stable video streams.
  - Tencent Cloud also offers cloud recording, stream management, and other related functions that help us manage and debug, which costs less time than doing it on our own.

> If you are not in mainland China, similar alternatives to Tencent Cloud may include [Amazon AWS Live Streaming](https://aws.amazon.com/en/solutions/implementations/live-streaming-on-aws/) and others, which you will need to find and test yourself.

- A free open-source platform: [SRS](https://ossrs.io/lts/en-us/).
  - Although its open-source community is smaller, it provides relatively clear use cases and plans, making it easier for people without the relevant knowledge to deploy and use.
  - Its overall performance is pretty good, which helps us reduce the cost.

In fact, **SRS** and **Tencent Cloud StreamLive Service** became the cornerstones of our entire solution. SRS in particular impressed us with its ease of use and complete functionality, which is why we promoted (and thanked) this open-source project in our live stream.

#### 2. How can we provide the casters with a stable, low-latency view of the cam?

We think the answer is to use the [SRT protocol](https://en.wikipedia.org/wiki/Secure_Reliable_Transport). According to what we found online, SRT performs much better than RTMP in terms of latency. After testing, we settled on this solution: set up the **SRS** service on the LAN where the director is, and let the casters use **FFplay** to pull the SRT stream and watch it directly. The cost of this solution is that it takes up some of our already limited uplink bandwidth, but because it worked perfectly for us and solved some strange side issues, we did not need to change it for the time being.

During the third week's OW stream, MoTong pulled the stream in Perth, Australia, from our location in Fujian, China, to take part in the casting. We did encounter more frequent screen stutters during that cast, but the co-caster in mainland China did not have this issue, so overall this is acceptable.

#### 3. How can we push the stream to multiple platforms?

The answer is a big enough uplink bandwidth, and **FFmpeg**. As mentioned above, we are just using regular home broadband. While it has a decent downlink speed (1000 Mbps), its uplink bandwidth is only around 30 Mbps.
Two SRT streams, each at 8 Mbps, are provided for the caster and co-caster. The PC that actually pushes the stream online also outputs an 8 Mbps video stream (the PC that provides the SRT streams and the PC that streams to Twitch/Bilibili are two different PCs used by the director). That already uses 24 Mbps of our roughly 30 Mbps uplink, so we can only push one video stream out. This simple math problem has caused us a lot of trouble. In fact, during one stream an unexpected device took up some uplink bandwidth, which caused problems for the online stream PC and eventually left all stream platforms stuck for several minutes. Under these conditions, pushing to multiple platforms locally was impossible.

To solve this problem, we turned to **SRS**. As mentioned earlier, we deployed an SRS server on the LAN to solve the SRT stream problem. This time, we take advantage of its [Edge cluster](https://ossrs.io/lts/en-us/docs/v4/doc/edge) feature: we deploy an external SRS server and use our previously deployed LAN SRS server as the origin. For technical details, you can read the link above. From here on, the SRS server on the LAN is referred to as the 【source server】, and the new SRS server deployed externally is referred to as the 【distributer server in Mainland China】.

The Edge cluster of SRS has one significant advantage: we do not need to change the configuration of the source server, and the 【distributer server in Mainland China】 replicates and caches the stream accordingly. The benefit is that no matter how many clients request the stream from the 【distributer server in Mainland China】, it only pulls one stream from the 【source server】. This is exactly what we need for "push only one stream from the source, but go live on multiple platforms". So we bought enough uplink bandwidth for the 【distributer server in Mainland China】, and on it we run multiple FFmpeg instances that pull the RTMP stream and push it to the other platforms.

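The uplink arithmetic and the fan-out on the distributer server can be sketched roughly as follows. This is a minimal illustration, not our actual scripts: the function names, the example URLs, and the stream keys are all hypothetical, and the choice of `-c copy -f flv` (standard FFmpeg options for re-publishing over RTMP without re-encoding) is an assumption rather than the exact command line we ran.

```python
import shlex

UPLINK_MBPS = 30   # approximate home broadband uplink, from the text above
STREAM_MBPS = 8    # bitrate of each caster SRT stream and of the outgoing stream


def uplink_headroom(caster_streams: int, platform_pushes: int) -> int:
    """Remaining uplink (Mbps) if every stream leaves the director's LAN directly."""
    used = (caster_streams + platform_pushes) * STREAM_MBPS
    return UPLINK_MBPS - used


def relay_command(pull_url: str, push_url: str) -> list[str]:
    """One FFmpeg process: pull RTMP from the distributer and re-publish it unchanged."""
    return ["ffmpeg", "-i", pull_url, "-c", "copy", "-f", "flv", push_url]


# Hypothetical URLs, for illustration only.
DISTRIBUTER_URL = "rtmp://distributer.example/live/program"
PLATFORM_URLS = [
    "rtmp://platform-a.example/live/STREAM_KEY_A",
    "rtmp://platform-b.example/live/STREAM_KEY_B",
]

if __name__ == "__main__":
    # Two caster streams plus one platform push fit into the uplink...
    print(uplink_headroom(caster_streams=2, platform_pushes=1))  # 6
    # ...but a second direct platform push does not.
    print(uplink_headroom(caster_streams=2, platform_pushes=2))  # -2
    # On the distributer server, one relay process per target platform.
    for target in PLATFORM_URLS:
        print(shlex.join(relay_command(DISTRIBUTER_URL, target)))
```

Because each relay runs on the distributer server, adding a platform only adds load there. The home uplink still carries the same single outgoing stream.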
One special thing is that, since we are in mainland China, there is no way to push a stream directly from a mainland China server to Twitch and YouTube. There is also no way to push a stream to Chinese platforms such as Bilibili, Douyu, Weibo, QQ Channel, and Douyin from servers located outside mainland China. (Douyin and TikTok operate independently of each other, even though both are ByteDance products with highly similar functions.) So we added a 【distributer server in Silicon Valley】, which is fed from the 【distributer server in Mainland China】 mentioned above. Together they work like a "greedy snake" (the game Snake), each server feeding the next. The data flows as follows: 【source server】→【distributer server in Mainland China】→【distributer server in Silicon Valley】.

But after a network fluctuation, we realized that relaying data out of the mainland this way carries a potential risk. To solve this problem in a short time, we finally changed the structure to 【source server】→【distributer server in Mainland China】→【Tencent Cloud StreamLive Service】→【distributer server in Silicon Valley】.

> You may ask why we do not use a structure like this: 【source server】→【Tencent Cloud server】→【distributer server in Silicon Valley】 and 【distributer server in Mainland China】.

Because we were worried about two things:

1. By the time we became aware of the problem, we were less than two hours away from the start of the Soltech OW Week 4 stream. The tech support person who was able to set it up at the time (me) was responsible for checking the working status of all the stream servers, and also had to coordinate the setup and checks of 3 OBcams and 6 player cams. There was not enough time to verify whether this workflow would lead to serious stream accidents caused by configuration errors.
2. We had a really weird problem in our testing that we still have not figured out: when the stream from our 【source server】 is pushed to the 【Tencent Cloud server】 via FFmpeg, then pulled back by FFmpeg and finally streamed after audio-video mixing and further video processing, the lower half of the picture blurs more frequently. This problem has become a big concern for us.

For these reasons, we decided to bet that picture blurring on overseas platforms would not be a big issue, and to guarantee a smooth stream on Chinese platforms first. In the end, we chose to change only the relevant configuration of the 【distributer server in Silicon Valley】 and to add an FFmpeg instance to the server hosting the 【distributer server in Mainland China】. After that instance pushes the stream to the 【Tencent Cloud StreamLive Service】, the 【distributer server in Silicon Valley】 uses the [Ingest](https://ossrs.io/lts/en-us/docs/v4/doc/ingest) function of SRS to pull, cache, and distribute it as further streams. We did observe occasional picture blurring on overseas streaming platforms. After discussion, however, we concluded that there is no way to improve this except paying more for better servers. The problem may also not be particularly serious for overseas livestreaming hosts, and we consider the stream quality "acceptable".

### Our solution structure's evolution process

First, I would like to highlight a few important points that help explain some of our moves:

1. We think SRT is a better choice than RTMP, so SRT is preferred whenever it is available.
2. With SRS 4.0, you can push an SRT stream to an SRS server or pull an SRT stream from it. However, when SRS uses Edge cluster or Ingest, it seems it **cannot** deliver SRT streams automatically. Instead, it converts them to RTMP streams within SRS for distribution and delivery.
3. For that reason, we do not use SRT in the distribution process because the SRS server currently only provides RTMP streams there, not because we do not want to use it. We have contacted the SRS open-source community and the core developers of the SRS project, and they all mentioned improvements in future releases, but those releases are not yet "production-ready" and we do not have the time or energy to "risk" them.
4. The few seconds of additional delay that distribution adds to the final picture is considered acceptable, because the PS2CPC stream is deliberately delayed by **7 minutes** to avoid leaking tactics or stream sniping.

#### 1. The original plan

This is the plan we designed at the beginning. It is clearly very similar to what individual streamers do. The only special feature is the addition of an internal streaming relay server (SRS), which we discussed above, to give the casters low-latency video streams. The main steps are as follows:

1. Two OBcams use **OBS** to capture the in-game picture and push it directly to the LAN **SRS** server where the director is.
2. The director uses **OBS** to pull the pictures from the LAN **SRS** server, edits them, and pushes the video stream that the casters and viewers should see back to the LAN **SRS** server.
3. The casters pull the director's output video stream over the SRT protocol and cast over it.
4. At the same time, the NDI plugin for **OBS** sends the picture directly to the stream PC, where it is mixed with the casters' audio.
5. The stream PC pushes the stream (with the in-game picture and caster voices) to the target live streaming platform.

We believe this stream process is easy to understand. The core points are:

1. As mentioned above, SRT latency is low enough that we can treat the audio from the casters' microphones as "in sync" with the actual picture, so we get an acceptably synchronized stream without manually compensating for voice lag on the stream PC.
2. The NDI plugin for OBS carries out the critical step.

However, we found serious problems when using this solution:

1. Because of a problem with the OBS NDI plugin, the CPU usage of the stream PC stayed close to 100%, and in the worst cases OBS became temporarily unresponsive. Note that this stream PC uses an AMD Ryzen 7 5800X, which is definitely a powerful CPU. We cannot explain why NDI causes this problem, nor can we fix it.
2. Since we are on home broadband, having the OBcams push their streams directly to our LAN SRS server is unreliable, which may lead to picture problems or even disconnections.

#### 2. Improved plan 1

To solve the problems in the original plan, we made a simple modification.

Our improvements are highly targeted:

1. To solve problem 1, we gave up the NDI plugin for OBS entirely and used only OBS's built-in streaming function. The transfer goes through the SRS server on the LAN, which solved the severe CPU overload.
2. To solve problem 2, the OBcams no longer stream to our LAN SRS server directly. Instead, we use the Tencent Cloud server to collect the original pictures, which not only solves the instability but also lets us support more original stream inputs with greater confidence.

At this point the plan meets the needs of most people, who stream to a single platform, and should solve the technical problems most people run into. However, we encountered non-technical problems while streaming that still needed solving:

1. We need to push the stream to multiple platforms instead of a single one.
2. Because the casters and OBcams are separated by design, and because Soltech's server API had not been fixed at that time, we could present very little information. Neither the viewers nor the casters could see the situation of the whole battlefield, resulting in an unsatisfactory stream.

#### 3. Improved plan 2

To solve these non-technical problems, we modified the solution further. The vast majority of these changes were to the working process rather than to the technical solution.

Our improvements are mainly in the following two aspects:

1. We used the solution described above to build a "greedy snake" style chain of servers based on SRS.
2. We applied for an extra OBcam, which is used as the map cam. Its role has been described above. Technically, it pushes a map stream to Tencent Cloud, from which it goes directly to the director and the casters. For the director, its delay is indistinguishable from that of any other slot. For the casters, the map stream is even slightly ahead of the pure stream, but this is good enough for observing the battlefield.

#### 4. The plan we are using now

This figure shows the detailed structure of improved plan 2 above, which we are using now. The solid lines and arrows in different colors represent the direction of the streams for different protocols, and the dashed lines and arrows represent the direction of the audio. You may need a little patience to understand what we are trying to convey in the context of the previous figures.

This solution meets our needs for the time being and will not undergo major changes in the short term. However, some points can still be improved:

1. We have not changed the way we handle the low-latency streams for caster viewing, so by our calculations we can currently only provide two caster streams on our 30 Mbps uplink.
One test we are considering but have not yet run is to have an **FFmpeg** instance on the LAN **SRS** server push the low-latency stream over SRT to the **SRS** server that serves the Chinese live streaming platforms, and then use that server's higher bandwidth to provide more caster streams. We have not tested this direction and do not plan to in the short term, because everyone's time is limited.
2. This also includes the "greedy snake" style server compromise discussed above. Personally, I do not think chaining servers through **Edge cluster** should be a problem if our two stream servers have a good enough internet connection. However, we do not have the time or money to test it.
3. We think this plan scales very well. The recording and transcoding functions of the **SRS** server are good extension points: they make local recording and clipping possible, and FFmpeg supports many additional functions such as transcoding and watermarking. We can also have someone control OBS on the stream PC. If the team has enough equipment and staff, you can even cut highlights from the match in real time for the post-game review. The only changes needed are to configure **Ingest** to take all the original streams into the LAN **SRS** server, and to give that SRS server adequate computing power and a larger hard drive (or even cloud storage).

In short, the only things holding you back are money and people.

# At last

## Why do we do this?

The answer is quite simple. There are still many players in mainland China who play PlanetSide 2, and many who abandoned the game after the national server, run by JiuCheng, was closed. More importantly, we are losing new blood. We love this game, and we want to do more than what we have done. We want to unite and attract new players.
In the planning process, we also realized that this is not only a problem faced by Chinese players, but by all players.

## Why do we publish this document?

In fact, we decided to make this document public as soon as we formed our live broadcast team. What we want to do is popularize this game. Games that have been running for such a long time and are still full of vitality are rare, but if no new players join, they will inevitably die eventually.

We believe that Outfit War, an event of this scale, is a big event for the entire PlanetSide 2 player base, and it could have become an important opportunity for DBG to increase income and improve player cohesion and sense of achievement. However, with so little exposure, such an important event can only become "players entertaining themselves", and we find it both a pity and hard to understand that this huge opportunity to publicize and popularize the game was not used. We even heard that some outfits in Soltech gave up, saying, "What's the point if the only reward is a frame and a flag?" Other outfit members suggested, "How great would it be if the winner could customize a camo named after their own outfit to be sold on the market?" DBG could have earned more positive feedback and income from its hard work, so why did it stop before moving towards further success?

What we hope is that DBG realizes the importance of the Outfit War for the whole game and player community, and that it has the ability and motivation to build it into a regular event. In addition, as ESL and BLAST do, it would not be a bad thing to have the event's live broadcast produced to a standard by DBG itself or by an authorized organization, and to distribute the pure live stream feed to authorized live broadcast hosts, so that more players and hosts can take part in content distribution and discussion. This could even become a new source of profit for DBG.

We may not know how DBG views the Outfit War, but what we can see is that PSB, the Honu community, and a large number of community organizations and individuals who want to take part in broadcasting the Outfit War have contributed a lot of time and energy to this event. They have worked hard to promote and present the wonderful moments of the game with very limited resources, but they also face insufficient resources and support. Taking us as an example, the API of the Soltech server failed to work normally for several months and was not repaired. As a result, at the beginning we could not even use the community-made game plug-ins like overseas live broadcast hosts could, and other communities were unable to collect some key data. Fortunately, the Soltech API finally returned to normal not long ago, but this also made us realize that an important premise for players and communities to carry out activities is that DBG is able to provide these resources to the community.

We are not here to blame DBG for inaction. We just hope that DBG can listen to the opinions and suggestions of the community and provide more resources and facilities. This may not cost a lot of money and energy, but it can make the community and the player base stronger.

In short, our purposes are as follows:

1. We hope that DBG becomes more aware of the commercial value and brand influence of the Outfit War, which is crucial to the promotion of the game.
2. We seriously believe that, in addition to directly issuing OB accounts, DBG should organize official media teams, or authorize media teams, to produce high-quality no-caster streams, and should provide official media or players with the game feed in a reasonable way to facilitate their live broadcasts of the matches. We seriously believe that this is what the official side should do.
We have proved that low-cost production is feasible, but we do not have the funds or the professional skills to produce high-quality live broadcasts. You do, and so do organizations with more professionals and financial support.
3. We hope the official team can communicate more with the player community on operations, listen to its needs and feedback, and even hand some media operation ideas to the player community for trial and error. If community groups find a sustainable path, the official side can recognize their efforts and give them support. Let the community, the Jaeger server, and the test server serve as an experimental field for planning and for exchanging views with the community, and even promote a move of PlanetSide 2 towards e-sports and other as yet unknown possibilities.

None of us on the team is what you would call a "professional", and we were able to do this only because of the small contributions from all the communities involved. We are truly grateful to everyone who has provided us with financial, technical, and moral support. Without you, we could not have done this.

If this article and the weird problems we have encountered can help you avoid some detours, we will be very happy, too.

catsaysmeow

October 6, 2022, 01:14
